Explore Data Mining: How to Use Proxies Judiciously

In today's data-driven world, the ability to collect and process information quickly is essential. As companies and individuals increasingly depend on web scraping for competitive analysis, understanding the role of proxies becomes necessary. Proxies let users browse the web anonymously and access content without being limited by location restrictions or rate limits. However, with a multitude of proxy options available, knowing how to select and use them wisely can make all the difference in the success of your data collection efforts.

This article examines the details of proxy usage, from acquiring free proxies to using advanced proxy checkers for verification. We will explore the top tools for scraping and managing proxies, including features like speed testing and anonymity checks. Additionally, we will analyze the differences between various types of proxies, such as HTTP, SOCKS4, and SOCKS5, as well as the distinctions between shared and private proxies. By the end of this guide, you will be equipped with the knowledge to use proxies wisely, ensuring that your data extraction efforts are both effective and reliable.

Understanding Proxies: Categories and Uses

Proxies serve as intermediaries between a client's device and the destination server, providing various functionalities based on their type. One common type is the HTTP proxy, which is designed for handling web traffic and can support features like content filtering and caching. These proxies are commonly used for tasks like web scraping and browsing the internet privately. SOCKS proxies, on the other hand, are more versatile: they relay arbitrary TCP connections, and SOCKS5 can also relay UDP, making them appropriate for a variety of applications beyond web browsing.

The choice between proxy types also depends on the level of anonymity needed. HTTP proxies may offer limited anonymity, because some of them add headers such as X-Forwarded-For or Via that expose the source IP address. SOCKS4 and SOCKS5 proxies relay traffic without interpreting or rewriting HTTP headers, so they avoid that particular leak. SOCKS5, in particular, supports authentication and UDP, making it a favored option for use cases requiring greater anonymity and performance, such as online gaming or streaming platforms.
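
As a minimal sketch of how the proxy type shows up in practice, the snippet below builds proxy URLs for different schemes. The helper names `build_proxy_url` and `proxies_mapping` are hypothetical, and the addresses come from documentation-only IP ranges. The resulting dictionary has the shape that the `requests` library accepts for its `proxies` argument (SOCKS schemes there require the optional `requests[socks]` extra).

```python
from urllib.parse import quote

SUPPORTED_SCHEMES = {"http", "https", "socks4", "socks5"}

def build_proxy_url(scheme, host, port, username=None, password=None):
    """Return a proxy URL such as 'socks5://user:pass@host:1080'."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    auth = ""
    if username is not None:
        # Percent-encode credentials so characters like '@' or ':' stay unambiguous.
        auth = quote(username, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"{scheme}://{auth}{host}:{port}"

def proxies_mapping(proxy_url):
    """Route both plain HTTP and HTTPS requests through the same proxy."""
    return {"http": proxy_url, "https": proxy_url}

http_proxy = build_proxy_url("http", "203.0.113.10", 8080)
socks_proxy = build_proxy_url("socks5", "203.0.113.11", 1080, "user", "p@ss")

print(proxies_mapping(http_proxy))
print(socks_proxy)  # socks5://user:p%40ss@203.0.113.11:1080
```

Only the scheme changes between an HTTP and a SOCKS5 proxy here, which makes it easy to switch types per task.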

When using proxy servers, understanding their specific use cases is critical for achieving the desired outcome. For instance, web data extraction projects usually benefit from fast proxies that can bypass restrictions and ensure reliable access to target sites. Additionally, automating tasks often demands trustworthy proxy providers that can handle multiple requests without compromising speed or data integrity. Selecting the right type of server based on these requirements can greatly enhance the effectiveness of data extraction efforts.

Proxy Scraping: Tools and Methods

When diving into proxy scraping, choosing the appropriate tools is essential for effective data extraction. Proxy scrapers are vital for collecting lists of proxies, and numerous options cater to different needs. Free proxy scrapers offer a solid starting point for beginners, while fast proxy scrapers let users operate efficiently without significant latency. Tools like ProxyStorm offer a simplified way to collect proxies and test their effectiveness, making them valuable resources for web scraping projects.
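
To illustrate what a proxy scraper does at its core, here is a small sketch that pulls `ip:port` pairs out of raw text, such as the body of a downloaded proxy list page. The function name `extract_proxies` and the sample text are made up for illustration; real tools also handle other formats and fetch the pages themselves.

```python
import re

# Four dot-separated groups of 1-3 digits, a colon, then a 2-5 digit port.
PROXY_RE = re.compile(r"\b((?:\d{1,3}\.){3}\d{1,3}):(\d{2,5})\b")

def extract_proxies(text):
    """Return unique, plausible 'ip:port' strings in order of first appearance."""
    seen = set()
    result = []
    for ip, port in PROXY_RE.findall(text):
        octets_ok = all(0 <= int(octet) <= 255 for octet in ip.split("."))
        port_ok = 0 < int(port) <= 65535
        entry = f"{ip}:{port}"
        if octets_ok and port_ok and entry not in seen:
            seen.add(entry)
            result.append(entry)
    return result

sample = (
    "up: 203.0.113.5:8080, 198.51.100.7:3128; "
    "bad: 999.1.1.1:80; dup: 203.0.113.5:8080"
)
print(extract_proxies(sample))  # ['203.0.113.5:8080', '198.51.100.7:3128']
```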

Once proxies are obtained, verifying their functionality is equally crucial. Good proxy checkers run a series of tests to verify that proxies are working as expected. These validation tools often assess parameters like speed and anonymity, helping users avoid slow or unreliable proxies. Protocol-specific tools, such as SOCKS proxy checkers, cater to different scraping scenarios while maintaining robust performance.
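
A basic check of this kind can be sketched with the standard library alone. The example below assumes an HTTP proxy given as `host:port` and a test URL of your choosing (`http://example.com/` is only a placeholder). The names `open_via_proxy` and `check_proxy` are hypothetical, and the `fetch` parameter exists so the timing logic can be tried without network access.

```python
import time
import urllib.request

def open_via_proxy(proxy, url, timeout):
    """Fetch `url` through an HTTP proxy ('host:port') and return the status code."""
    handler = urllib.request.ProxyHandler(
        {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    )
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.status

def check_proxy(proxy, url="http://example.com/", timeout=5.0, fetch=open_via_proxy):
    """Report whether the proxy answered and how long the request took."""
    start = time.perf_counter()
    try:
        status = fetch(proxy, url, timeout)
    except Exception as exc:  # timeouts, refused connections, malformed replies
        return {"proxy": proxy, "ok": False, "latency": None,
                "error": type(exc).__name__}
    latency = time.perf_counter() - start
    return {"proxy": proxy, "ok": 200 <= status < 400,
            "latency": latency, "error": None}

# Demonstration with a stand-in fetch function instead of a live proxy.
result = check_proxy("203.0.113.5:8080", fetch=lambda proxy, url, timeout: 200)
print(result["ok"])
```

Calling `check_proxy("host:port")` without the `fetch` argument performs a real request through the proxy.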

To get the most out of proxies, understanding the distinctions between different types is crucial. HTTP, SOCKS4, and SOCKS5 proxies fulfill different purposes in web scraping. HTTP proxies are often used for simple tasks, while SOCKS proxies offer greater flexibility for more intricate automation. By using the appropriate tools to scrape proxies and understanding their characteristics, users can greatly improve their data harvesting efforts and navigate the web efficiently.

Free vs. Paid Proxies: What to Choose

When evaluating proxies for data harvesting and web scraping, one of the primary decisions is whether to use free or paid proxies. Free proxies are easily accessible and require no payment, making them an appealing option for occasional users or those new to the field. Yet they often come with drawbacks such as slower speeds, more downtime, and lower reliability. Moreover, free proxies are often shared among many users, which can lead to issues with speed and anonymity, compromising the effectiveness of your web scraping efforts.

Conversely, paid proxies are typically more reliable and offer superior performance. They commonly come with dedicated IP addresses, which significantly enhance both speed and anonymity. This reliability is crucial for businesses or users who depend on data extraction to operate effectively. Paid proxy services usually offer extra benefits such as location-based targeting, enhanced security protocols, and technical support, making them a preferred option for serious data extraction tasks and automation processes.

In the end, the decision between free and paid proxies depends on your particular needs and usage scenario. If you are involved in casual browsing or low-stakes scraping, free proxies might be sufficient. However, for high-volume web scraping, automation, or tasks that require guaranteed uptime and security, opting for a high-quality paid proxy service is often the wise choice.

Assessing and Verifying Proxies

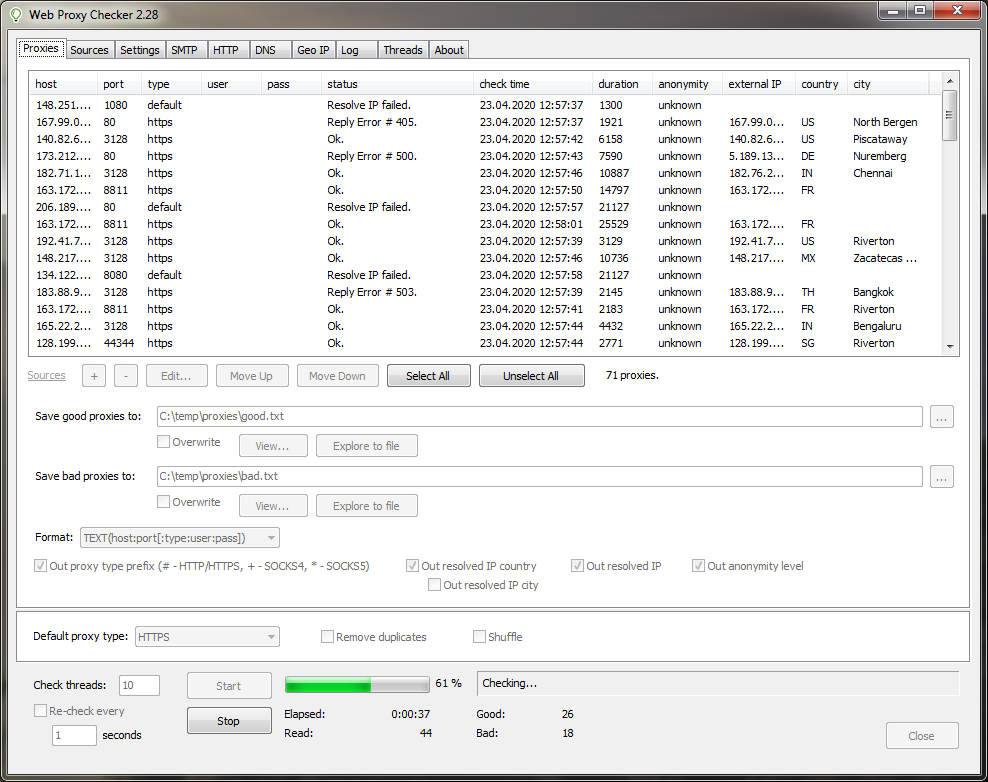

When using proxy servers, testing and validation are vital steps to make sure they function properly and satisfy your requirements. A solid proxy checker can save you effort by highlighting which proxy servers in your collection are working and which are down. Various proxy checker tools let you assess multiple proxies simultaneously, checking their latency, anonymity, and protocol. This procedure ensures that your data extraction efforts are not obstructed by dead or poor-quality proxies.
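
Checking many proxies at once is usually done with a worker pool. The sketch below is one possible approach using a thread pool; `check_all` is a hypothetical helper, and `fake_check` is a stand-in for a real check that would send a request through each proxy and return `True` or `False`.

```python
from concurrent.futures import ThreadPoolExecutor

def check_all(proxies, check, max_workers=20):
    """Run `check(proxy)` for every proxy in parallel; return (working, dead)."""
    proxies = list(proxies)
    working, dead = [], []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map yields results in input order, so zip pairs them correctly.
        for proxy, ok in zip(proxies, pool.map(check, proxies)):
            (working if ok else dead).append(proxy)
    return working, dead

def fake_check(proxy):
    """Stand-in checker: pretends only proxies on port 8080 respond."""
    return proxy.endswith(":8080")

candidates = ["203.0.113.5:8080", "198.51.100.7:3128", "192.0.2.9:8080"]
working, dead = check_all(candidates, fake_check)
print(working)  # ['203.0.113.5:8080', '192.0.2.9:8080']
print(dead)     # ['198.51.100.7:3128']
```

Threads fit this job because each check spends most of its time waiting on the network.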

Another key aspect is verifying proxy speed. Fast proxies are essential for effective data gathering, especially when scraping websites that implement rate limits or other defenses against high-volume requests. Tools that check proxy speed can help you find top-performing proxies that deliver fast and reliable connections. Additionally, knowing the differences between HTTP, SOCKS4, and SOCKS5 proxies can guide your selection based on the particular needs of your scraping project.

Finally, testing proxy anonymity is essential for maintaining confidentiality and avoiding detection. Levels of anonymity differ between proxies, and using a tool to check whether a proxy is transparent, anonymous, or elite (high anonymity) will help you determine how much protection you have. This process is particularly significant when collecting competitive data or sensitive information, where being detected can lead to blocking or legal issues. By using thorough proxy testing and validation methods, you can ensure optimal performance in your data gathering tasks.
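
The three anonymity levels can be approximated from the request headers a target server receives. The sketch below assumes you have some endpoint that echoes those headers back (often called a proxy judge; none is named in this article) and that you know your real IP address. The header list and the function name `classify_anonymity` are illustrative, and the substring match on the IP is a simplification.

```python
# Headers that commonly reveal a request passed through a proxy.
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection")

def classify_anonymity(echoed_headers, real_ip):
    """Classify a proxy from the headers the target server saw."""
    headers = {name.lower(): value for name, value in echoed_headers.items()}
    # Transparent: the client's real IP leaks through some header.
    if any(real_ip in value for value in headers.values()):
        return "transparent"
    # Anonymous: the IP is hidden, but the proxy announces itself.
    if any(name in headers for name in PROXY_HEADERS):
        return "anonymous"
    # Elite: no leaked IP and no proxy-revealing headers.
    return "elite"

real_ip = "198.51.100.20"
print(classify_anonymity({"X-Forwarded-For": "198.51.100.20", "Via": "1.1 proxy"}, real_ip))
print(classify_anonymity({"Via": "1.1 proxy"}, real_ip))
print(classify_anonymity({"User-Agent": "demo"}, real_ip))
```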

Proxy Management for Web Scraping

Proper proxy management is vital for successful web scraping. It ensures that your scraping activities remain undetected and efficient. By using a proxy scraper, you can gather a varied set of proxies across which to spread your requests. Distributing requests across multiple IP addresses not only reduces the chances of being blocked but also speeds up data extraction. A well-maintained proxy list allows you to switch proxies frequently, which is necessary when scraping data from sites that monitor and limit IP usage.
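
Spreading requests across a proxy list can be as simple as round-robin rotation. The following is a minimal sketch; the `ProxyRotator` class, the example URLs, and the proxy addresses are all hypothetical, and no requests are actually sent.

```python
import itertools

class ProxyRotator:
    """Hand out proxies in round-robin order so load is spread evenly."""

    def __init__(self, proxies):
        proxies = list(proxies)
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self):
        return next(self._cycle)

rotator = ProxyRotator(["203.0.113.5:8080", "198.51.100.7:3128", "192.0.2.9:1080"])
urls = [f"https://example.com/page/{n}" for n in range(1, 6)]

# Pair each URL with the proxy that would carry its request.
for url in urls:
    print(url, "via", rotator.next_proxy())
```

With three proxies and five URLs, the fourth request wraps around to the first proxy again.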

In addition to employing a proxy scraper, you should use a trusted proxy checker to monitor the health and performance of your proxies. This tool can test speed, anonymity level, and reliability, making sure that the proxies in use are suitable for your scraping tasks. With the appropriate proxy verification tool, you can filter out slow or poor-quality proxies, thus maintaining the efficiency of your web scraping process. Frequently testing and updating your proxy list will help keep your operations smooth and uninterrupted.

When it comes to selecting proxies for web scraping, think about the differences between private and public proxies. Private proxies offer higher speed and security, making them an ideal choice for demanding scraping jobs, while public proxies are generally slower and less reliable but can be used for less intensive tasks. Knowing how to find high-quality proxies and manage them effectively will make a significant difference in the quality and quantity of data you can extract, ultimately improving your results in data extraction and automation tasks.

Best Practices for Using Proxies

When using proxies for data extraction, it is crucial to choose a trustworthy proxy source. Free proxies may seem inviting, but they generally come with risks such as slow speeds, frequent downtime, and potential security vulnerabilities. Opting for a paid proxy service can provide more reliability, higher-quality proxies, and better anonymity. Look for services that supply HTTP and SOCKS proxies and have a strong reputation among web scraping communities, ensuring you have the best tools for your projects.

Regularly testing and verifying your proxies is crucial to maintaining their effectiveness. Use a reputable proxy checker to assess the speed, reliability, and anonymity of your proxies. This way, you can identify which proxies are performing best and remove those that do not meet your performance standards. Running speed tests and checking geographic location can further help you tailor your proxy usage to your specific scraping needs.
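
Once proxies have been tested, filtering and ranking them is straightforward. This sketch assumes each test result is a dictionary with the hypothetical keys `proxy`, `ok`, and `latency` (in seconds); the 2-second cutoff is an arbitrary example of a performance standard, not a recommendation.

```python
def rank_proxies(results, max_latency=2.0):
    """Keep working proxies at or under the latency cutoff, fastest first."""
    usable = [
        r for r in results
        if r["ok"] and r["latency"] is not None and r["latency"] <= max_latency
    ]
    return [r["proxy"] for r in sorted(usable, key=lambda r: r["latency"])]

results = [
    {"proxy": "203.0.113.5:8080", "ok": True, "latency": 1.4},
    {"proxy": "198.51.100.7:3128", "ok": False, "latency": None},
    {"proxy": "192.0.2.9:1080", "ok": True, "latency": 0.3},
    {"proxy": "192.0.2.44:8080", "ok": True, "latency": 4.8},
]
print(rank_proxies(results))  # ['192.0.2.9:1080', '203.0.113.5:8080']
```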

Finally, understand the various types of proxies on the market and their respective uses. HTTP, SOCKS4, and SOCKS5 proxies serve distinct purposes, and knowing the distinctions is necessary for effective web scraping. For example, while SOCKS5 proxies accommodate a wider range of protocols and provide more flexibility, they may not be required for every task. Knowing your specific requirements will help you optimize your proxy usage and ensure effectiveness in your data extraction efforts.

Automation and Proxy Solutions: Boosting Productivity

In today's fast-paced digital landscape, efficient automation in data extraction is essential. Proxies play a crucial role in this process by allowing users to handle multiple requests simultaneously without raising red flags. By using a trustworthy proxy scraper, you can gather a wide variety of IP addresses across which to spread your web scraping tasks, significantly reducing the risk of being blocked by target websites. This method not only speeds up data collection but also ensures that your scraping activities stay under the radar.

Implementing a solid proxy verification tool is crucial to maintaining the effectiveness of your automation efforts. A good proxy checker allows you to filter out non-functional proxies efficiently, ensuring that only reliable IPs are in your rotation. The verification procedure should include checking proxy speed, anonymity levels, and response times. By frequently testing your proxies and discarding low-performing ones, you can maintain optimal performance during your scraping tasks, leading to faster and more reliable results.
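
In an automated workflow, discarding low-performing proxies can happen continuously rather than in a separate pass. The sketch below shows one way to do that: a pool that drops a proxy after a number of consecutive failures. The `ProxyPool` class and the threshold of three failures are assumptions made for illustration.

```python
import random

class ProxyPool:
    """Track consecutive failures and evict proxies that keep failing."""

    def __init__(self, proxies, max_failures=3):
        self.max_failures = max_failures
        self._failures = {proxy: 0 for proxy in proxies}

    def pick(self):
        """Return a random healthy proxy."""
        if not self._failures:
            raise LookupError("no healthy proxies left")
        return random.choice(list(self._failures))

    def report(self, proxy, success):
        """Record the outcome of a request made through `proxy`."""
        if proxy not in self._failures:
            return  # already evicted or never part of the pool
        if success:
            self._failures[proxy] = 0  # a success resets the streak
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self.max_failures:
            del self._failures[proxy]

    def healthy(self):
        return list(self._failures)

pool = ProxyPool(["203.0.113.5:8080", "198.51.100.7:3128"], max_failures=2)
pool.report("198.51.100.7:3128", success=False)
pool.report("198.51.100.7:3128", success=False)  # second failure in a row: evicted
print(pool.healthy())  # ['203.0.113.5:8080']
```

Resetting the counter on success keeps a proxy with occasional hiccups in rotation while still removing ones that have gone dead.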

To boost efficiency further, consider integrating SEO tools with proxy support into your automation workflows. This can improve data extraction capabilities and provide insights that are invaluable for competitive analysis. Tools that scrape proxies for free can be a cost-effective solution, while purchasing private proxies may result in better performance. Finding a balance between private and public proxies and continuously monitoring their effectiveness will allow your automation processes to flourish, ultimately improving the quality and speed of your data extraction efforts.